This article details my adventures in recovering teletext data from VHS tapes. I'm just starting out and still figuring out what to do, so please don't take this as an expert guide to teletext recovery. However I hope that by writing down the hurdles I've faced and what I've learnt while it's still fresh in my mind I can create the beginner's guide I wish I'd had, and document my future experiments.

At the heart of all teletext recovery is the vhs-teletext software by Alistair Buxton.

This suite of scripts works by capturing raw samples of the vertical blanking intervals from a video signal using a video capture card on Linux. In a clean broadcast quality television signal these signals would have clearly defined bits, but as the bandwidth of a VHS recording is lower than that of broadcast television the individual teletext bits are blurred together and can't simply be fed into a normal teletext decoder.

The vhs-teletext repository explains the recovery principle in more detail, but essentially it looks at the smeared grey signal and attempts to determine what pattern of bits was originally transmitted to result in that output after the filtering in the VHS recording process.

The original version of the software used a 'guessing algorithm', but the current 'version 2' software works by matching patterns to a library of pre-generated blurry signals. This approach enables the use of GPU acceleration on a compatible Nvidia graphics card which reduces the time taken to process a recovery enormously.

My setup

Ideally you would install a suitable graphics card into your video capturing PC and do the entire process under Linux. I only own one compatible graphics card and it is installed in my main Windows 7 PC which I don't want to dual boot, so I am capturing the signals on a capture PC and then pulling them over the network to process them.

The recovery software required some hacking to be able to run on Windows at all, and getting the environment set up with the Nvidia CUDA SDK and all the required python modules was somewhat awkward so I recommend sticking to Linux for the whole process if you can.

For my initial attempts at VHS teletext recovery I fed composite video from an old Saisho VRS 5000X that has a simple phono composite output into an ancient Pentium 4 PC via a Mercury TV capture card (Phillips SAA7134 chip) which necessitated writing a new config file for vhs-teletext and modifying some of the scripts slightly to handle the fact that this chip outputs 17 VBI lines per field instead of 16. This produced promising but disappointing results. The capture script showed huge numbers of possible dropped frames.

General wisdom dictates that a higher sample rate Brooktree/Conexant 8x8 based chipset is required, so I ordered a couple of suitable cards from ebay and while waiting for those I renovated another broken PC to use as a VHS capturing box.

Suitable Hauppauge WinTV capture cards can be identified by compating their model number to this webpage. There are also the PCI Hauppauge ImpactVCB cards (NOT PCI-e models).

As soon as the new card arrived I installed it and ran a capture of my best tape in the new rig. The results were disappointingly similar to before.

After some fruitless fiddling with settings in the recovery config file I decided that there was too much interference on the video signal and no amount of messing with settings would be able to reliably extract teletext. Adjusting the settings would make some lines improve but make others worse. The data was there to be extracted but no setting could do it all.

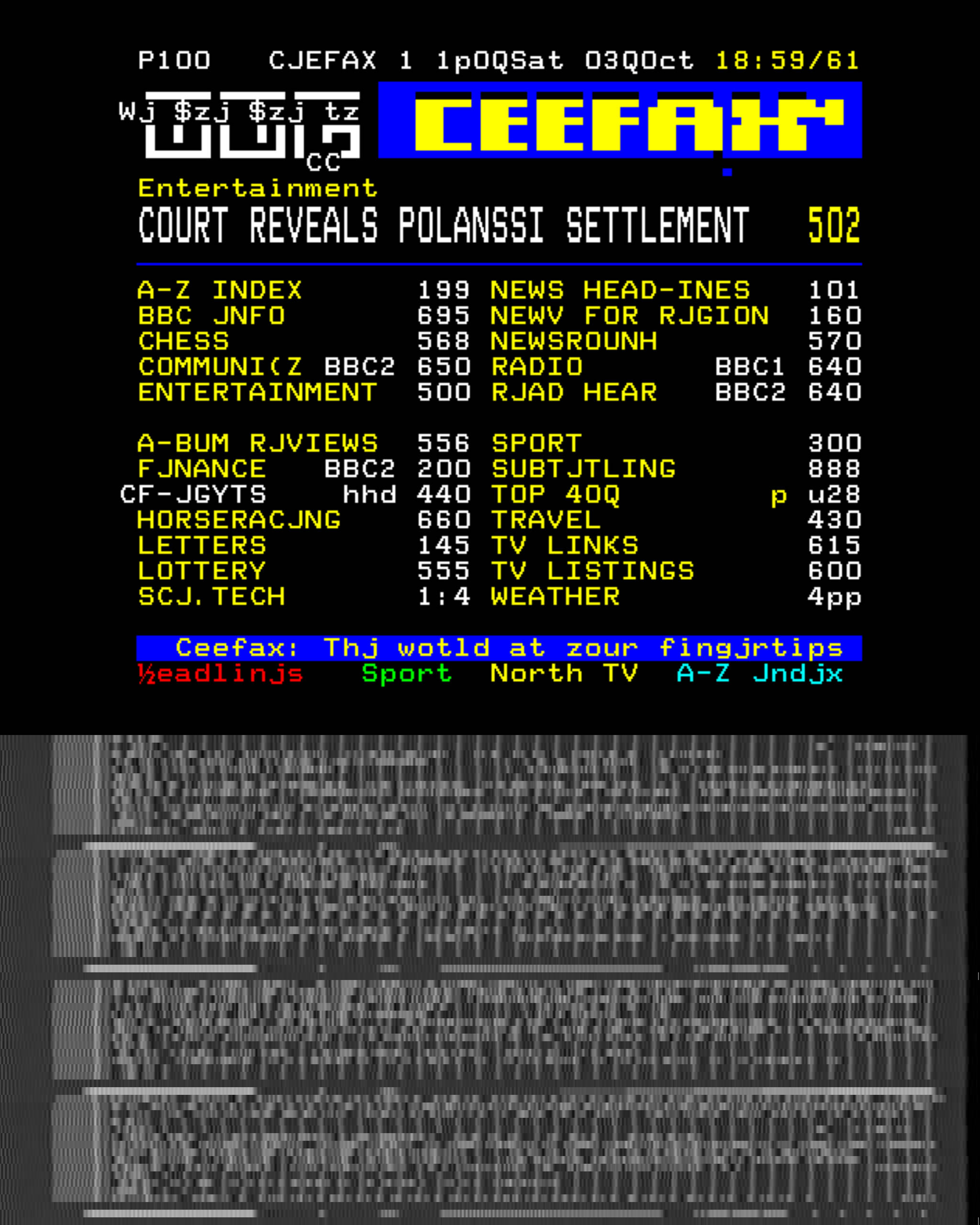

At this point I abandoned the Saisho VCR and made up an appropriate SCART to phono composite cable to use my newer Samsung SV-661B. I ran the same tape again through that and the difference is obvious. The resulting output after deconvolution is almost error free and can be played back as a teletext signal as-is with very few errors.

The two images show the output of the two VCRs along with a small sample of the raw blanking interval signal. I believe that the strong periodic interference disrupts the recovery script's ability to discern the exact position of the clock run in and framing code, and therefore can't calculate the correct offset to each subsequent data bit.

I am now using the following hardware.

Capture machine:- Intel Core2Duo E6600 CPU

- Hauppauge ImpactVCB 64405 PCI video capture board (Conexant Fusion 878A chip)

- Debian 9

- Intel Core i7-3820 CPU

- Nvidia GTX 660 graphics card

- Windows 7

Ongoing updates

2018-12-22

I have created a fork of the vhs-teletext software with modified code I am using on Windows. I have also begun butchering the scripts further to begin supporting more packet types (currently only the t42 processing scripts. The deconvolved data will not be useful.)

2018-12-23

Created a script to slice a squashed recovery into its constituent subpages as a directory of separate .t42 files.

2018-12-27

Further experiments show that the 878A chipset card works fine on the slower Pentium 4 PC with no dropped frames. Testing the saa7134 chipset card into the Core2Duo PC was inconclusive as an examination of the captured vbi data shows a curious lack of horizontal sync for the first few lines of each field.

2018-12-30

Created a three hour pattern training tape using raspi-teletext on a spare Raspberry Pi with the output from training --generate.

After capturing the vbi data back into the capture PC as training.vbi I processed it with training --train training.vbi > training.dat.orig. I captured a full three hours of the training signal which resulted in a 43 GB intermediate training file.

I split the intermediate file using training --split training.dat.orig which generates 256 files named training.nn.dat where nn is two hexadecimal digits.

A small windows batch script iterated over the 256 .dat files executing training --sort training.nn.dat followed by training --squash training.nn.dat.sorted and finally joining all the resulting files training.nn.dat.sorted.squashed into a single training.dat.squashed.

Finally I was able to generate the three pattern data files with training --full training.dat.squashed, training --parity training.dat.squashed, and training --hamming training.dat.squashed.

I don't believe that creating my own Hamming and parity patterns was necessary and has any measurable effect on the quality of the recovery process, however it was necessary to generate a full.dat pattern file for 8-bit data recovery experiments as that is not included in the original distribution of the software.

Some modifications to teletext/vbi/line.py allows the deconvolution process to recover the 8-bit data in the Broadcast Service Data Packet and any datacast packets, and also treat Hamming 24/18 coded data as 8-bit data, as the code previously recovered everything as 7-bit text with parity resulting in additional corruption.

2018-12-31

After wasting the previous evening and staying up until the early hours of the morning doing futile experiments in an attempt to implement a better process for matching Hamming 24/18 coded bits, I came up with another idea for recovering the data in enhancement packets.

With the implementation of 8-bit decoding of the 39 bytes which make up the Hamming 24/18 coded data I was now able to extract these packets with only the expected occasional errors inherent in the recovery process. These results were already promising with the standard squashing algorithm, but still not error free on any of the level 2 pages I have found on my tapes so far.

I noticed while looking at the unsquashed recovered data in a hex editor and my Teletext Packet Analyser script, that in general all the enhancement triplets were recovered without errors at least once, but because the squashing process simply does a modal average it was picking damaged ones in the squashed output.

Also, because it averages bytewise, it would pick bad bytes within a triplet. The 24 bits of coded triplet data are one value and should be treated as a single unit

My solution was to order the 74 possible packets which can be associated with a subpage (X/0, X/1-X/25, X/26/0-X/26/15, X/27/0-X/27/15, and X/28/0-X/28/15) such that the ones which can be considered bytewise are separated from the ones containing triplet data.

I created a new to_triplets function in the packet class which takes the 42 bytes and converts them to fourteen 24-bit words. This includes the Magazine Row Address Group and Designation Code, which are treated as the first 24 bit value.

For the remaining 13 words the code also performs a Hamming 24/18 decode, and if there are any errors returns NaN. This allows the modal average performed on these packets to disregard and triplet which contains an error and find the most common error free triplet. This enables it to create a composite of the working parts of many damaged copies of the packet.

If there are no completely error free copies of a triplet in the deconvolved data this will introduce a new error by omitting the triplet entirely. It is possible to only reject unrecoverable errors found in the Hamming decode, bit I found this to produce worse output than also rejecting recoverable errors.

Where no error free copy of a triplet is found it may be possible that one of the copies with a recoverable error is sufficient to repair the packet, but I haven't created any code to do this automatically yet.

2019-12-15

I have neglected this page over the past year, and there have been many developments to the vhs-recovery software in that time. This renders some of my previous updates and fork of the ‘v2’ code irrelevant.

The current state of the scripts is that the new ‘v3’ version of the scripts for Python3 runs on Windows ‘out-of-the-box’, deconvolves and squashes more packet types, decodes broadcast service data packets, and includes a split command which can export a single file per subpage.